“Where there is life, there is hope”

Backpropagation Objectives

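The backpropagation algorithm is commonly used to update the parameters of neural networks. It computes the gradient of the cost with respect to every weight and bias, so that each one can be adjusted in the direction that reduces the cost, which greatly improves model performance.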

Popular deep learning frameworks, such as PyTorch and TensorFlow, already have the backpropagation algorithm built into their neural networks.

The underlying mechanism of backpropagation is the chain rule applied to partial derivatives.

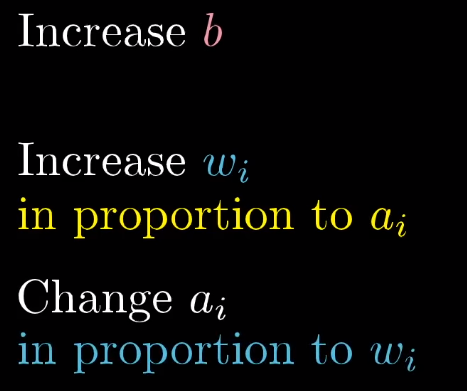

Backpropagation aims to find which weights, biases, and activations have the largest influence on the cost, and to update them accordingly so that the cost is minimized.

Detailed Explanation

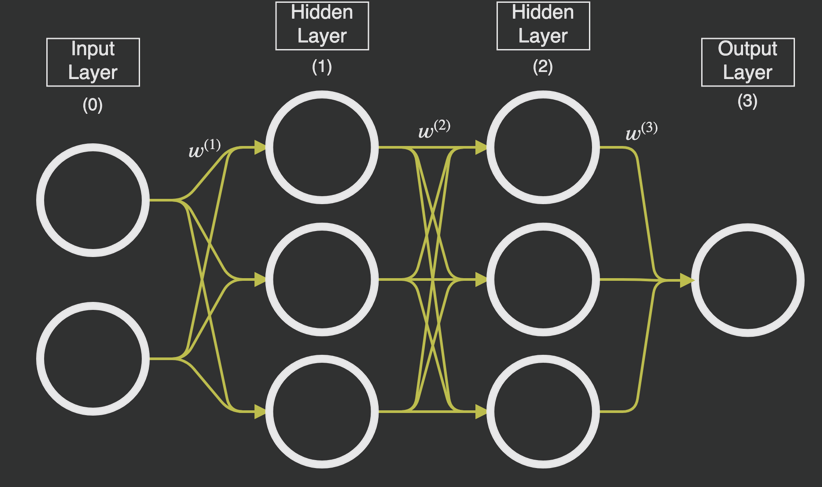

Suppose an MLP with two hidden layers:

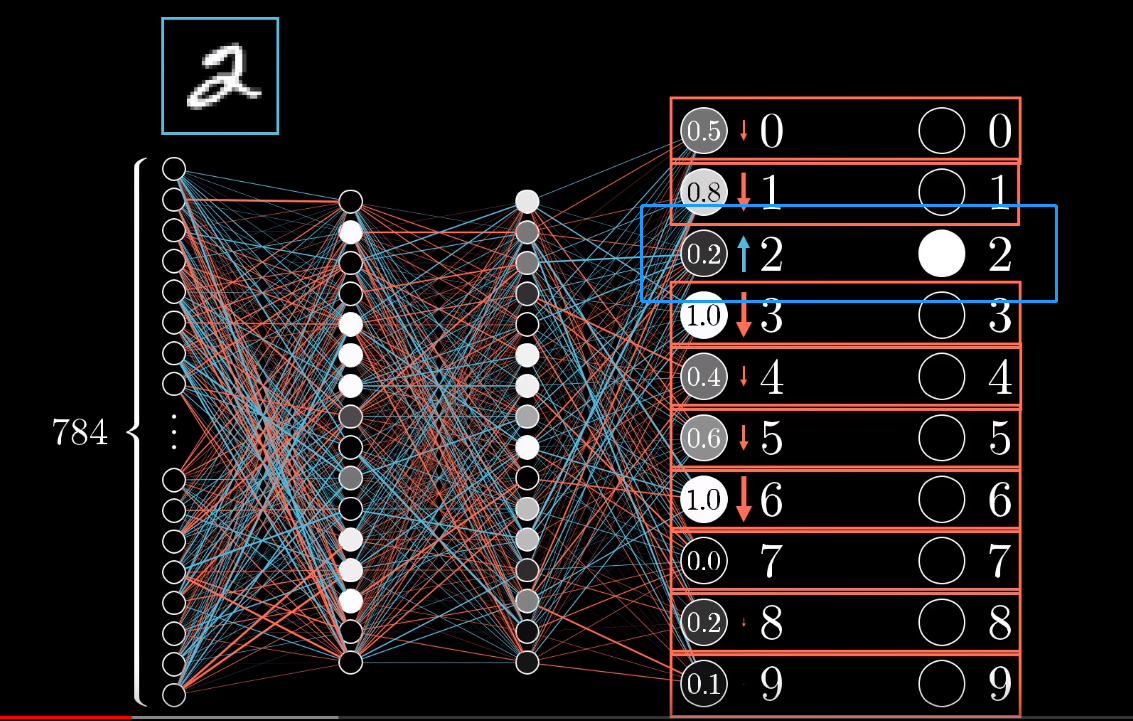

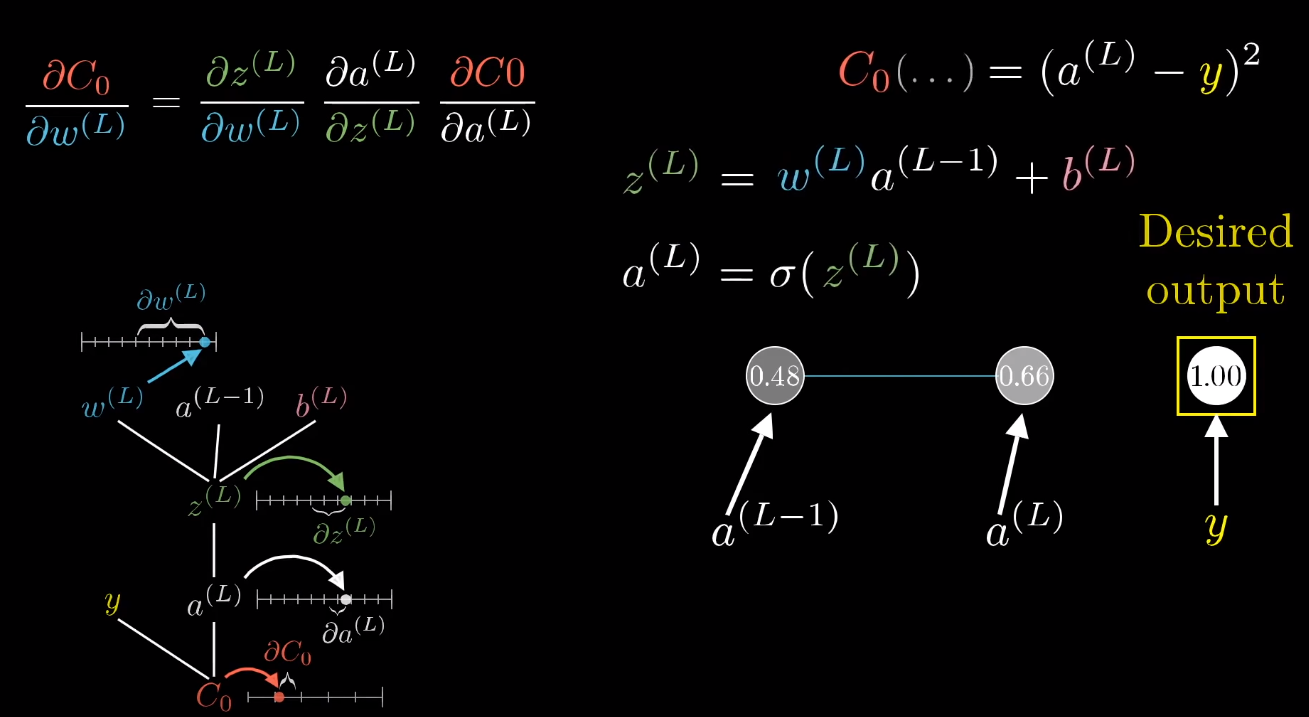

For example (the last layer):

\[Cost = a^{L} - y\] \[a^L = \sigma(z^L)\] \[z^L = w^La^{(L-1)} + b^L\]
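Applying the chain rule to these three definitions gives the sensitivity of the cost to the last-layer weight, bias, and incoming activation. This is only a sketch that follows the cost exactly as written here. If a squared-error cost \((a^L - y)^2\) is used instead (an assumption, not stated in the text), the final factor becomes \(2(a^L - y)\) rather than 1.

\[\frac{\partial Cost}{\partial w^L} = \frac{\partial z^L}{\partial w^L}\,\frac{\partial a^L}{\partial z^L}\,\frac{\partial Cost}{\partial a^L} = a^{(L-1)}\,\sigma'(z^L)\cdot 1\]

\[\frac{\partial Cost}{\partial b^L} = \frac{\partial z^L}{\partial b^L}\,\frac{\partial a^L}{\partial z^L}\,\frac{\partial Cost}{\partial a^L} = 1\cdot\sigma'(z^L)\cdot 1\]

\[\frac{\partial Cost}{\partial a^{(L-1)}} = \frac{\partial z^L}{\partial a^{(L-1)}}\,\frac{\partial a^L}{\partial z^L}\,\frac{\partial Cost}{\partial a^L} = w^L\,\sigma'(z^L)\cdot 1\]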

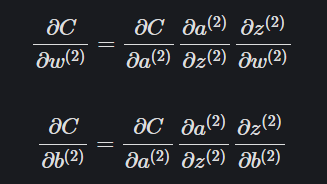

This relates the cost to the weights and biases of hidden layer 2: we calculate the ratio between a small change in each weight (or bias) and the resulting change in the cost function. The weights and biases with the largest ratio have the greatest impact on the cost function and give us ‘the most bang for our buck’.
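The ratio described above is the partial derivative of the cost with respect to each parameter. The sketch below computes it for a single sigmoid neuron and compares it with a finite-difference estimate, that is, nudging a parameter and measuring how much the cost changes. It assumes a squared-error cost `(a - y) ** 2`, which is a common choice but an assumption here. The function names and numeric values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, a_prev, y):
    """Squared-error cost of a single sigmoid neuron (assumed cost)."""
    a = sigmoid(w * a_prev + b)
    return (a - y) ** 2


def last_layer_gradients(w, b, a_prev, y):
    """Chain rule: dC/dw = dz/dw * da/dz * dC/da, and likewise for b."""
    z = w * a_prev + b
    a = sigmoid(z)
    dC_da = 2.0 * (a - y)            # derivative of (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)            # derivative of the sigmoid
    dC_dw = a_prev * da_dz * dC_da   # dz/dw = a_prev
    dC_db = 1.0 * da_dz * dC_da      # dz/db = 1
    return dC_dw, dC_db


# Hypothetical values chosen only for illustration.
w, b, a_prev, y = 0.5, -0.2, 0.8, 1.0
dC_dw, dC_db = last_layer_gradients(w, b, a_prev, y)

# Finite-difference check: nudge each parameter and measure the cost change.
eps = 1e-6
num_dw = (cost(w + eps, b, a_prev, y) - cost(w - eps, b, a_prev, y)) / (2 * eps)
num_db = (cost(w, b + eps, a_prev, y) - cost(w, b - eps, a_prev, y)) / (2 * eps)
print(dC_dw, num_dw)
print(dC_db, num_db)
```

In this toy setting the bias has the larger ratio, because the incoming activation `a_prev` is below 1 and scales down the weight's gradient.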

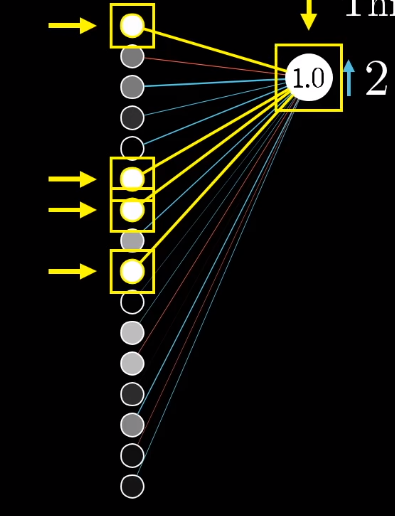

For example, suppose we want to increase the probability that the input is classified as 2. We would like to know which neurons’ weights, biases, and activations have the largest influence, so that we can adjust them to get our desired output, 2 (such as the yellow line).

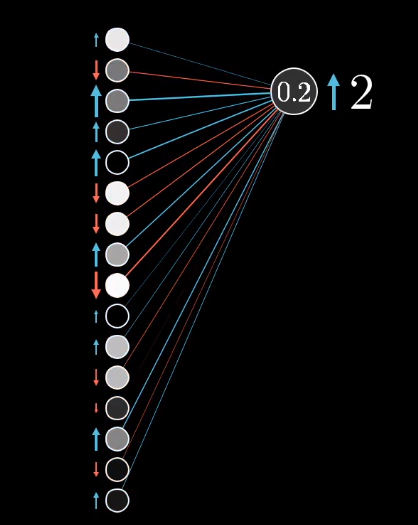

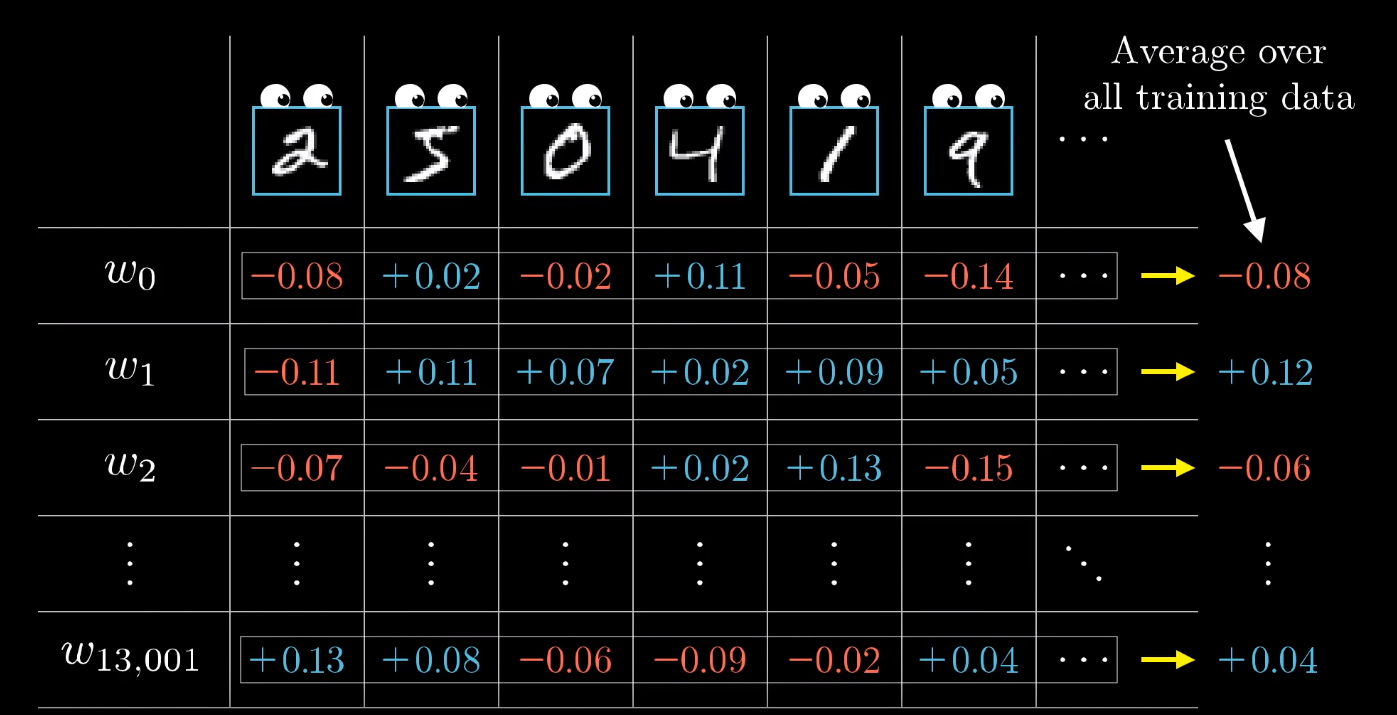

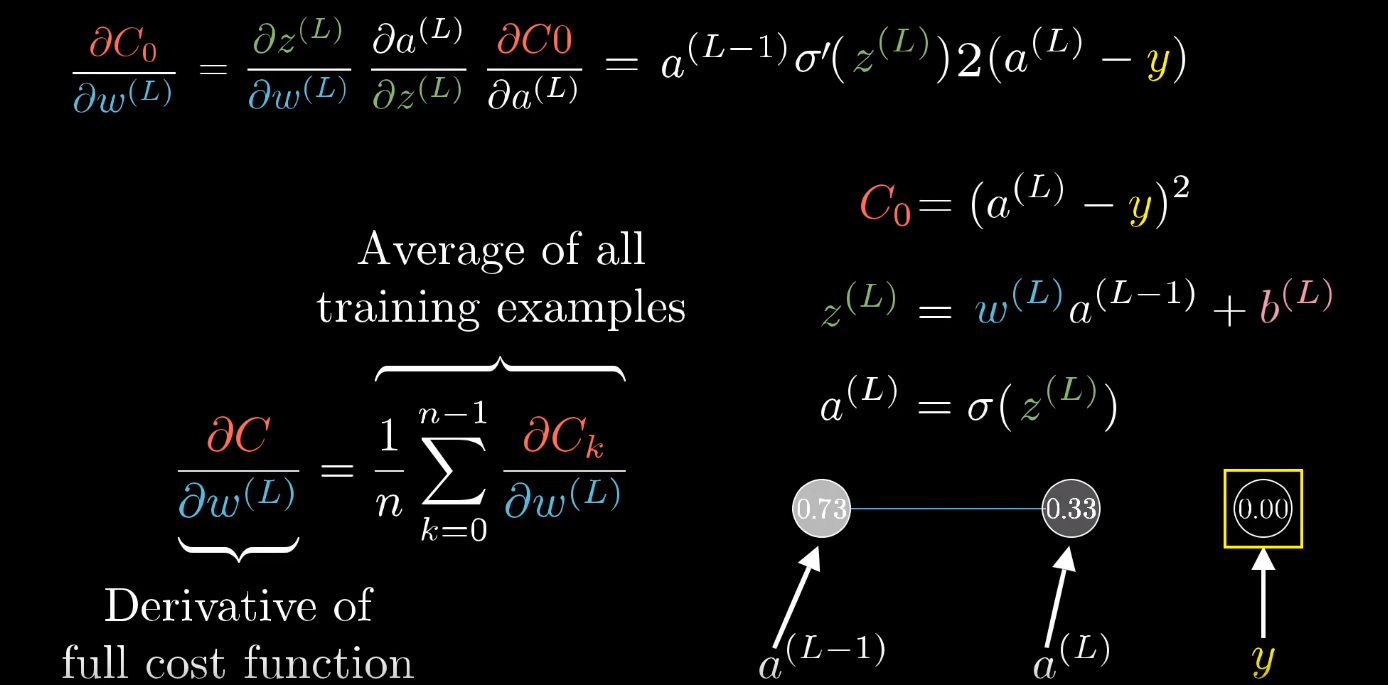

We then have to average the desired changes over all training examples.
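A minimal sketch of the averaging step, assuming the average is taken over the training examples in a batch and that the cost is squared error (both are assumptions, as are the function names and the toy data). Each example proposes its own change to the weight and bias, and the update uses the mean of those proposals.

```python
import math


def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def example_gradients(w, b, x, y):
    """Gradient of the assumed squared-error cost for one training example."""
    a = sigmoid(w * x + b)
    delta = 2.0 * (a - y) * a * (1.0 - a)  # dC/dz
    return delta * x, delta                # dC/dw, dC/db


def averaged_step(w, b, batch, lr):
    """Average the per-example gradients, then take one descent step."""
    grads = [example_gradients(w, b, x, y) for x, y in batch]
    mean_dw = sum(g[0] for g in grads) / len(grads)
    mean_db = sum(g[1] for g in grads) / len(grads)
    return w - lr * mean_dw, b - lr * mean_db


def mean_cost(w, b, batch):
    """Mean squared-error cost over the batch."""
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in batch) / len(batch)


# Hypothetical toy data: inputs paired with desired outputs.
batch = [(0.0, 0.0), (1.0, 1.0), (2.0, 1.0), (-1.0, 0.0)]
w, b = 0.1, 0.0
before = mean_cost(w, b, batch)
for _ in range(100):
    w, b = averaged_step(w, b, batch, lr=0.5)
after = mean_cost(w, b, batch)
print(before, after)
```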

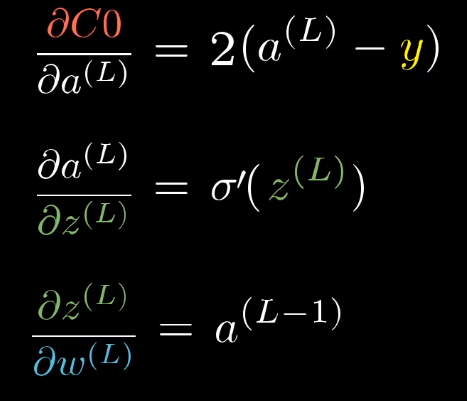

Math formula

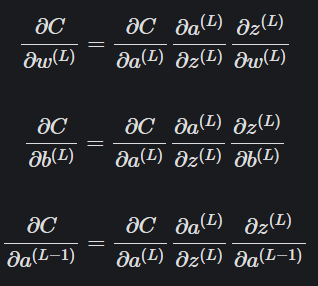

(output – the last hidden layer):

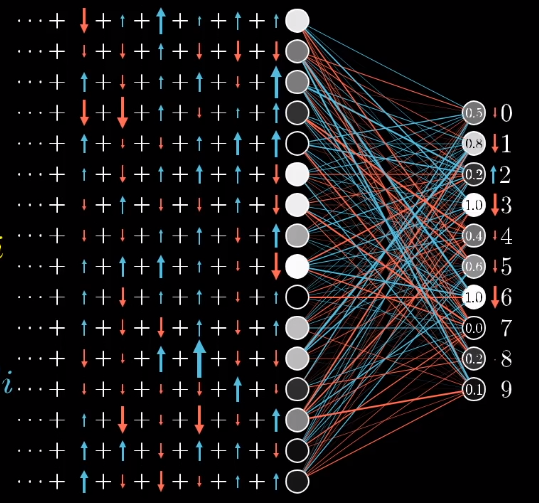

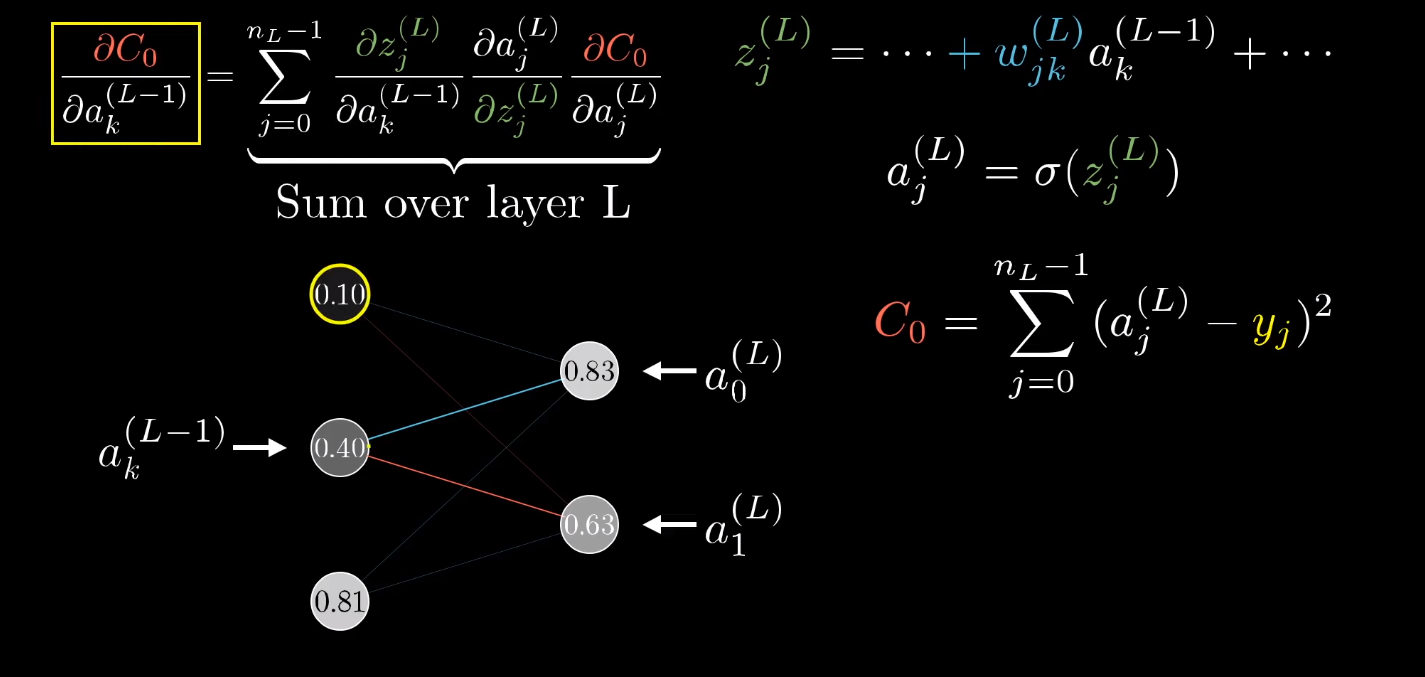

With multiple layers and neurons:
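With several neurons per layer, the same chain rule applies with indices added. The sketch below assumes the convention that \(w_{jk}^L\) connects neuron \(k\) in layer \(L-1\) to neuron \(j\) in layer \(L\); this indexing is an assumption and may differ from the figures.

\[z_j^L = \sum_k w_{jk}^L\,a_k^{(L-1)} + b_j^L, \qquad a_j^L = \sigma(z_j^L)\]

\[\frac{\partial Cost}{\partial w_{jk}^L} = a_k^{(L-1)}\,\sigma'(z_j^L)\,\frac{\partial Cost}{\partial a_j^L}\]

\[\frac{\partial Cost}{\partial a_k^{(L-1)}} = \sum_j w_{jk}^L\,\sigma'(z_j^L)\,\frac{\partial Cost}{\partial a_j^L}\]

The sum in the last line appears because an activation in layer \(L-1\) influences the cost through every neuron in layer \(L\).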

Calculating the gradient

Formula for the hidden layer 1:

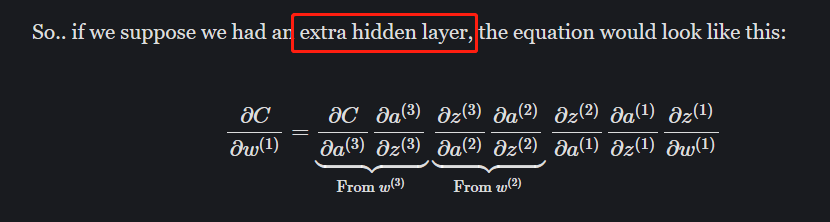

Extra hidden layer:

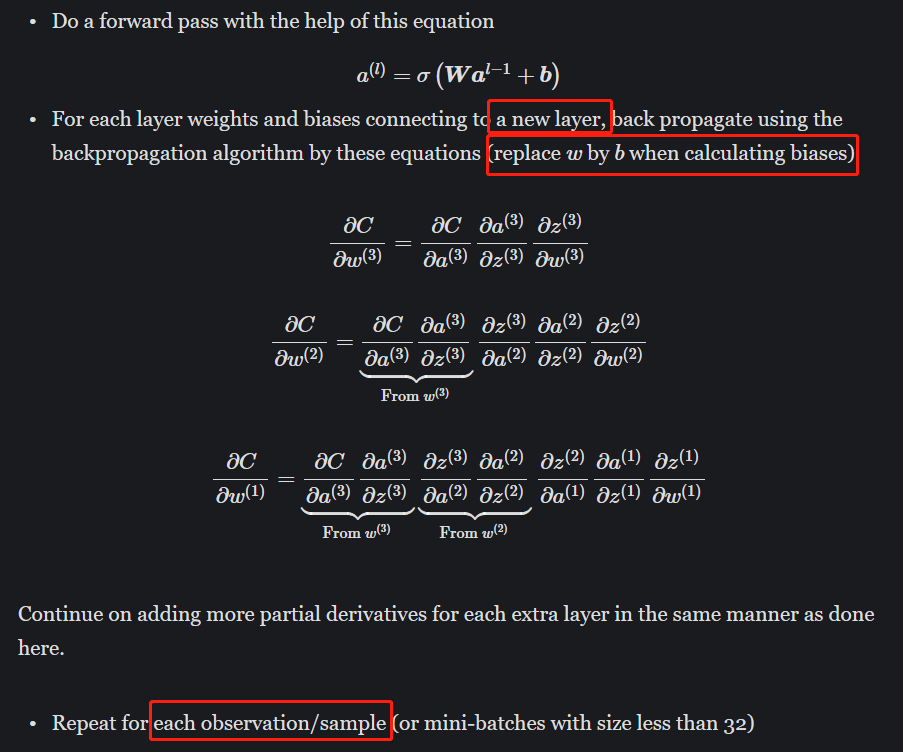
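The layer-by-layer pattern can be sketched in code. The example below uses one neuron per layer (two hidden layers plus an output layer) so that the chain rule stays scalar, and it assumes a squared-error cost and sigmoid activations. All names and numbers are hypothetical. The key idea is that the derivative of the cost with respect to a layer's activation is computed once and reused when moving one layer further back.

```python
import math


def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, biases, x):
    """Return the activations of every layer, input included."""
    activations = [x]
    for w, b in zip(weights, biases):
        activations.append(sigmoid(w * activations[-1] + b))
    return activations


def cost(weights, biases, x, y):
    """Assumed squared-error cost on the final activation."""
    return (forward(weights, biases, x)[-1] - y) ** 2


def backward(weights, biases, x, y):
    """Walk from the output back to hidden layer 1, reusing dC/da."""
    acts = forward(weights, biases, x)
    dC_da = 2.0 * (acts[-1] - y)          # derivative of the assumed cost
    grads_w = [0.0] * len(weights)
    grads_b = [0.0] * len(weights)
    for layer in reversed(range(len(weights))):
        a = acts[layer + 1]
        dC_dz = dC_da * a * (1.0 - a)     # through the sigmoid
        grads_w[layer] = dC_dz * acts[layer]
        grads_b[layer] = dC_dz
        dC_da = dC_dz * weights[layer]    # pass the signal one layer back
    return grads_w, grads_b


# Hypothetical parameters: hidden layer 1, hidden layer 2, output layer.
weights = [0.7, -1.2, 0.4]
biases = [0.1, 0.3, -0.5]
x, y = 0.9, 1.0
grads_w, grads_b = backward(weights, biases, x, y)

# Finite-difference check on the hidden layer 1 weight.
eps = 1e-6
bumped_up = [weights[0] + eps] + weights[1:]
bumped_down = [weights[0] - eps] + weights[1:]
numeric = (cost(bumped_up, biases, x, y) - cost(bumped_down, biases, x, y)) / (2 * eps)
print(grads_w[0], numeric)
```

Adding an extra hidden layer only lengthens the `weights` and `biases` lists; the backward loop itself does not change.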

Summary

Implementation

Multi-layer Perceptron with Backpropagation

Resources:

backpropagation ideas and formula